
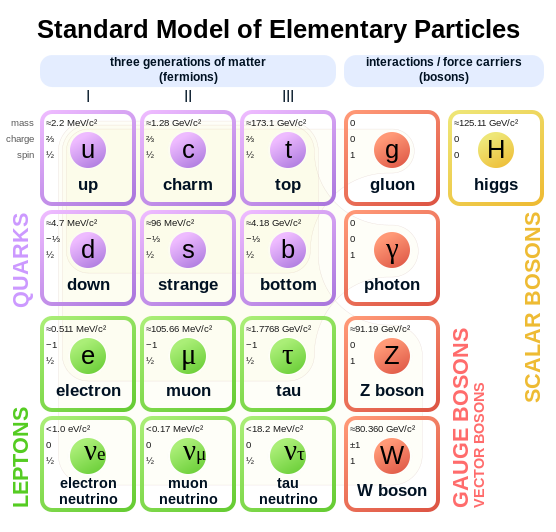

Particles in the Standard Model (from Wikipedia)

Particle physics is interesting to me for another reason.

To me, it represents what is still right about science. Let me explain.

In grade school in the 1960s we all grew up with the "atomic" model of matter: protons, electrons and neutrons, with electrons spinning around a little nucleus like planets around the sun. Of course, at higher levels of education there was much more: strong and weak forces, electromagnetism, and others. There were also many kinds of experiments being conducted with exotic technologies like "cloud chambers," atom smashers, and so on.

All of these things produced data which physicists had to explain. Why was there a weak force? Why was there electromagnetism? And so on.

To explain all this, a theory known today as the "Standard Model" was developed, starting around 1960.

The idea was that a theoretical set of "particles" interacted with each other in predictable ways, mostly through the notion of "exchanging particles." Along with the "theory" come detailed mathematical descriptions of the particles and their behaviors.

The last particle predicted by this theory but not yet observed is the "Higgs Boson."

So the "physics community" built the Large Hadron Collider (LHC), at a cost of some $10 billion USD, in order to have a device that could detect one. (The Higgs Boson requires an enormous amount of energy to produce and observe, and no existing device available to physicists was suitable.)

Now physicists are not like climate scientists (or doctors or medical scientists, for that matter) in that they like to believe that their equations are accurate. This means that the results of the LHC experiments must carry a high degree of certainty that what is being observed, the Higgs Boson in this case, is not some other random event.

To measure this certainty, physicists use something called a "sigma" level, derived from the statistical notion of standard deviation.

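Basically, it tells you how confident you can be in a measurement: how many standard deviations the observed signal lies away from what random background alone would be expected to produce.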

In the case of the LHC, physicists want to see at least 5 sigma before claiming a discovery (which corresponds to a confidence level of roughly 0.9999994).
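For the curious, here is a minimal sketch of how a sigma level maps to a confidence level, assuming the measurement noise follows a Gaussian (normal) distribution. The function names are my own hypothetical choices, not from any physics package; the code uses only Python's standard `math` module.

```python
import math


def two_sided_confidence(n_sigma: float) -> float:
    """Probability that a Gaussian value lands within +/- n_sigma of the mean."""
    return math.erf(n_sigma / math.sqrt(2))


def one_sided_p_value(n_sigma: float) -> float:
    """Probability of a Gaussian fluctuation at least n_sigma ABOVE the mean."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2))


# Print the confidence level and the chance of a pure fluke for a few sigma levels.
for n in (1, 2, 3, 5):
    print(f"{n} sigma: two-sided confidence = {two_sided_confidence(n):.7f}, "
          f"one-sided p-value = {one_sided_p_value(n):.2e}")
```

At 5 sigma the two-sided confidence rounds to the 0.9999994 quoted above. Particle physicists usually quote the one-sided figure instead: a p-value of about 2.9 × 10^-7, or roughly 1 chance in 3.5 million that background alone would fluctuate that high.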

Now the equations and the Standard Model don't necessarily "explain" physics as we know it today, but they do offer an accurate (5 sigma) and predictive model based on observation. (For example, in my last post I wrote about "The End of Certainty," which offers insight into completely different ways of thinking about physics.)

So for me the question becomes: why isn't something like "climate science" (or medical science) held to these same rigorous standards?

In that last post I mentioned this Nature article, which discusses global ocean temperature.

Basically, the article discusses data sets, adjustments to those data sets, and attempts to compare various mathematical climate models and match them to observed data.

Sadly, you won't find much in it about confidence levels or sigmas.

The reason, of course, is simple. There is no equivalent "Standard Climate Model" that matches (in concept) the physics "Standard Model."

Now remember that the physics "Standard Model" is simply a set of mathematical tools that matches the real world with a high degree of certainty. It does not "explain" the real world (and in fact there are many things in physics, like the two-slit experiment, which seem quite unexplainable given our current knowledge base) but merely offers accurate tools to predict it at a certain level.

In climate science there is no such predictive tool or theory.

If climate science had such a tool, it would be able to predict rainfall or temperature to within a few hundredths of an inch or a degree.

The excuse is that climate science "projects" what the climate will do rather than predicting it (see this in Scientific American).

To my mind this is like the phlogiston theory of the 1600s or the aether theories of the 1800s.

Both of these theories "projected" reasons for things. Phlogiston theory explained combustion by claiming that combustible matter contained a "fire element" that was released by burning. Aether theory explained the transmission of light by claiming that a substance permeated the universe through which light traveled.

People believed these theories, and to some extent they made reliable predictions for a while, at least until they were disproved by experiment.

Physicists learned from these theories. They learned that accuracy, repeatability and predictability were important when making predictions.

While they could "project" that the aether was carrying light from point A to point B, it turned out that their projections were wrong.

So aside from the other criticisms of "climate science" there is also the notion of "projection" versus "prediction."

(Now I don't believe that the Standard Model is the be-all and end-all of physics: there are issues with it and, as I have stated, it does not offer explanations for many things. So clearly more science is required. But our modern world, replete with cameras, computers, cell phones, and so on, relies on the fundamental predictability of the mathematics of the Standard Model.)

The Standard Model, then, is simply a tool, I guess.

And so is "climate science."

The problem then boils down to this: which tool is sharper?

The one that can cause the CCD in your 8-megapixel phone to take a picture (how often, when you push the button, does the phone fail to work?) or the one that cannot accurately predict tomorrow's weather? (I suppose "climate science" is in that sense like astrology, which could predict "Venus Rising in the Seventh House," or whatever, at some point in the future without the knowledge of calculus or Kepler's laws. Perhaps we should all revert to astrology...?)

To me, today's "climate science" is simply the phlogiston theory of 400 years ago.

Physics has learned a lot in that time; perhaps the same knowledge and tools should be applied to "climate science."

Don't you think that if "climate science" could build its own equivalent of the LHC and prove itself correct, it would already have done so? After all, it would only cost $10 billion USD.
